What are you trying to achieve?

- 0 Posts

- 54 Comments

131·3 months ago

131·3 months agoIt’s kind of horrifying nobody thought that through. What else did they fail to think about?

5·3 months ago

5·3 months agoTotally agree. Have been there and done that quite a few times too.

2·4 months ago

2·4 months agohides

7·4 months ago

7·4 months agoLoad average of 400???

You could install systat (or similar) and use output from sar to watch for thresholds and reboot if exceeded.

The upside of doing this is you may also be able to narrow down what is going on, exactly, when this happens, since sar records stats for CPU, memory, disk etc. So you can go back after the fact and you might be able to see if it is just a CPU thing or more than that. (Unless the problem happens instantly rather than gradually increasing).

PS: rather than using cron, you could run a script as a daemon that runs sar at 1 sec intervals.

Another thought is some kind of external watchdog. Curl webpage on server, if delay too long power cycle with smart home outlet? Idk. Just throwing crazy ideas out there.

4·4 months ago

4·4 months agoI think it was sunspots.

10·4 months ago

10·4 months agoHow could Linux do this to you??

(Totally never happened to me /s)

1·4 months ago

1·4 months agoGood to know. Well I have 16G now that should give me plenty to spare.

1·4 months ago

1·4 months agoI will have to try that once my ram upgrade gets here.

I try to help when I can to pay penance for when I was young and an asshole.

6·4 months ago

6·4 months agoI have a couple Lenovo tiny form factors: an M700 8GB w/ J3710 running Pihole on Ubuntu server—which is total overkill in both CPU and mem; and an M73, 4GB w/4th gen i3 running jellyfin server on Mint 21.3. Certain kinds of transcoding brings it to its knees but for most 1080p streaming it’s fine. Memory is a bit tight; 8G would be better. It has a usb3 2T drive for video files that runs more than quick enough. Serial adapters are available if you want to use the console.

The latter has been running for I think a couple years. The former I just set up.

But I’ve been shopping for newer gear for the Jellyfin server. I think you could get a Dell, HP, or Lenovo 6th gen TFF with 8G and 256-512G internal SSD within your price range.

I see some EliteDesk G800 G3 (6th gen Intel) tinys with no disk for $50-70 shipped on eBay in the US. I think those look the coolest by far :)

You could find one with no disk, no ram and config as you please and probably still come in under budget.

E-

My eventual plan for the Jellyfin server is a SFF, probably an HP with enough space to fit a couple of big HDDs, plus 16G ram and a newer CPU that can transcode on the fly without lag.

281·4 months ago

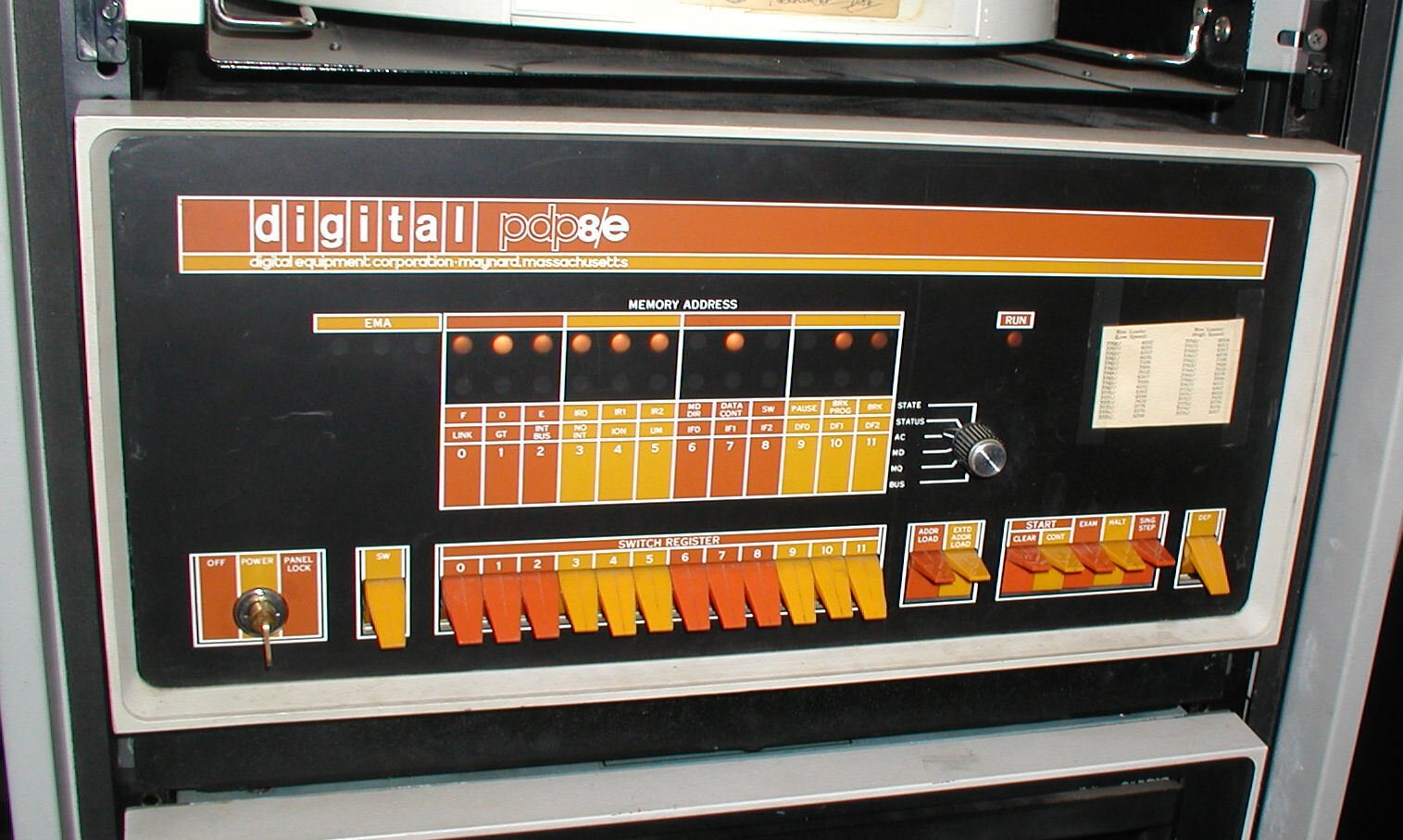

281·4 months agoDoesn’t everyone just boot by entering machine code with the switch panel in the front?

3·4 months ago

3·4 months agoOops :)

16·4 months ago

16·4 months agoIs true.

Fascinating stuff. Obviously a lot has changed since I took an undergrad OS class lol. Hell, Linux didn’t even exist back then.

Timer based interrupts are the foundation of pre-emptive multitasking operating systems.

You set up a timer to run every N milliseconds and generate an interrupt. The interrupt handler, the scheduler, decides what process will run during the next time slice (the time between these interrupts), and handles the task of saving the current process’ state and restoring the next process’ state.

To do that it saves all the CPU registers (incl stack pointer, instruction pointer, etc), updates the state of the process (runnable, running, blocked), and restores the registers for the next process, changes it’s state to running, then exits and the CPU resumes where the next process left off last time it was in a running state.

While it does that switcheroo, it can add how long the previous process was running.

The other thing that can cause a process to change state is when it asks for a resource that will take a while to access. Like waiting for keyboard input. Or reading from the disk. Or waiting for a tcp connection. Long and short of it is the kernel puts the process in a blocked state and waits for the appropriate I/O interrupt to put the process in a runnable state.

Or something along those lines. It’s been ages since I took an OS class and maybe I don’t have the details perfect but hopefully that gives you the gist of it.

Hmm. It’s been a hot minute (ok 30 years) since I learned about this stuff and I don’t know how it works in Linux in excruciating detail. Just the general idea.

So I would be curious to know if I am off base… but I would guess that, since the kernel is in charge of memory allocation, memory mapping, shared libraries, shared memory, and virtualization, that it simply keeps track of all the associated info.

Info includes the virtual memory pages it allocates to a process, which of those pages is mapped to physical memory vs swap, the working set size, mapping of shared memory into process virtual memory, and the memory the kernel has reserved for shared libraries.

I don’t think it is necessary to find out how much is “technically in use” by a process. That seems philosophical.

The job of the OS is all just resource management and whether the systems available physical memory is used up or not. Because if the system spends too much time swapping memory pages in and out, it slows down everything. Shared memory is accounted for correctly in managing all that.

Maybe I am not understanding your point, but the file system is a different resource than memory so a file system cache (like a browser used) has zero impact on or relevance to memory usage as far as resource management goes.

Weird that so many mainstream distros would be unusable out of the box.

“When he reached the New World, Cortezh burned hish ships. Ash a reshult hish men were well motivated.” —Capt. Ramius, played by Sean Connery in The Hunt for Red October